Quality Initiatives Deserve Better from Industry 4.0

Improved quality and lower quality costs are not top drivers of industrial transformation programs. Quality leaders are largely absent from high-level planning of industrial transformation initiatives, and digital transformation projects tend to miss the boat on quality—focusing instead on improved efficiency, faster delivery, and higher variety. Without a quality voice at the table, industrial transformation programs miss one of the main quality benefits: Predicting quality to prevent defects.

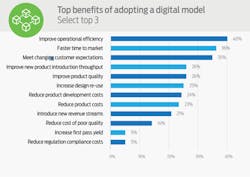

In a recent LNS Research report, fewer than 50% of organizations, on average, with an ongoing industrial transformation initiative had a quality leader on the team. Results from industrial transformation programs are also light on quality. A Forbes report, “100 Stats on Digital Transformation and Customer Experience,” shows that the top three benefits reported are improved operational efficiency, faster time to market and the ability to meet changing customer expectations.

While quality is certainly wrapped up in meeting changing customer expectations and efficiency, Figure 1 shows that quality-related benefits sit well down the list of reported impacts.

Quality programs historically have used tools and strategies including measurement sampling, failure modes and effects analysis, control plans and statistical process control to create a people-based network to prevent and detect defects. The challenge for quality leaders in the digital age is to translate the people network to manufacturing technology.

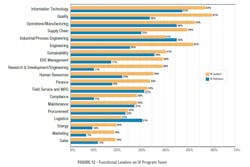

Industrial transformation efforts have historically under-represented the operations and quality functions, even though the factory is where the change is felt most dramatically. Plants are seldom the focus in either defining or executing the industrial transformation program, despite being the site of industrial operations. The chart below illustrates the issue. Although quality sits near the top of the chart, it is still represented on fewer than 50% of industrial transformation teams, and operations leadership is represented on an even smaller share. Some progress has been made over the past few years, but not nearly enough.

The answer here is that quality leaders need a seat at the table and a voice in the development and design of industrial transformation solutions.

So why aren’t they at the table?

It is difficult to see how digital solutions can solve real quality problems. How can quality leaders become acquainted with digital technology and apply it to the manufacturing quality situation? It is a challenge, for sure. Many software products are positioned as digital transformation solutions. A quick tour of the sponsor floor at a manufacturing and technology show reveals several that are not obviously digital transformation products, at least not in the manufacturing space. The definition of a digital transformation solution has become so wide and inclusive that it is difficult for the novice to discern which solution works for their situation. The way to resolve this issue is to flip the order of operations: start with the problem that needs to be solved, then search for potential solutions that fit that business problem.

A continuous process manufacturer implemented a digital transformation pilot initiative architected and led by the quality leader. The initiative included product quality as a key aspect of success for the program. This company had unacceptable levels of product quality complaints and defects, so digital product monitoring was implemented and integrated into the platform as part of the project. The digital monitoring tools were tied to important aspects of product quality analyzed from customer complaints and scrap reporting. The result was a 50% reduction in defects in the pilot phase of the project.

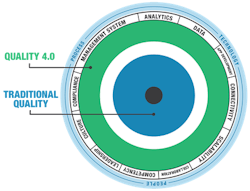

Figure 3, from LNS Research, shows Quality 4.0 as the same as “traditional” quality approaches, just with better and faster analysis and decision-making and a more connected environment. This definition of Quality 4.0 misses a key opportunity for the quality leader: prevention through prediction.

The main mission of the quality function is to construct systems and methods that prevent defects. We must absolutely prevent defects from escaping to the customer, so we put building blocks in place that warn us of impending defects. Control plans are an assemblage of checkpoints that should give a good outcome if all prescriptions are followed. Statistical process control incorporates a rule set to give us early warning of an impending defect. Sampling plans attempt to defeat patterns and give some level of assurance to the accept/reject decision.
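The early-warning idea behind a statistical process control rule set can be illustrated with a minimal Python sketch. It assumes two commonly cited rules (a point beyond the 3-sigma limits, and a run of eight consecutive points on one side of the center line); the article does not say which rule set the author has in mind. The function names are hypothetical, and estimating sigma from the sample standard deviation of baseline data is a simplification.

```python
from statistics import mean, stdev

def control_limits(baseline):
    """Center line and 3-sigma limits estimated from in-control baseline data.

    Assumption: sigma is the sample standard deviation of the baseline.
    """
    center = mean(baseline)
    sigma = stdev(baseline)
    return center, center - 3 * sigma, center + 3 * sigma

def spc_signals(values, center, lcl, ucl, run_length=8):
    """Return (index, reason) pairs for points that trip either rule."""
    signals = []
    run_side = 0   # +1 above center, -1 below, 0 exactly on the line
    run_count = 0
    for i, v in enumerate(values):
        # Rule A: a single point outside the control limits.
        if v > ucl or v < lcl:
            signals.append((i, "beyond 3-sigma limit"))
        # Rule B: a long run on one side of the center line.
        side = (v > center) - (v < center)
        if side != 0 and side == run_side:
            run_count += 1
        else:
            run_side = side
            run_count = 1 if side != 0 else 0
        if run_count >= run_length:
            signals.append((i, "run on one side of center line"))
    return signals
```

Note that the run rule can fire while every point is still inside the control limits, which is what makes it an early warning rather than a defect detector.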

Old Habits Die Hard

Until now, the weak point in this system has been the person. Getting people to perform consistently is notoriously difficult. Everyone occasionally has a bad day, wants time off or is distracted by home or family issues or conflicts with co-workers. Even on a good day, an inspector is only 80% effective at best.

The digital age requires out-of-the-box thinking, but old habits die hard. I visited an agricultural products factory in California in 2019. This factory (and in fact the entire company) had been on a home-grown digitalization journey for years. The production lines were heavily digitalized. The quality testing function was digitalized and semi-automated but not connected.

In reviewing the progress they had made on digitalization, I discovered that the quality function was behaving in exactly the same way as before digitalization: they were doing the same tests, with the same frequency, with the same level of understanding of what in the process led to a good or a poor quality outcome. There had been no change in the quality function along that entire digitalization journey; even the sampling frequency was the same.

All the tools and approaches that are a standard part of the effort to prevent defects are based in the past. We sample products after they are produced. We collect data and plot points on a control chart after the data exist, sometimes well after. We apply statistical process control rules to previously generated data to give early warning of patterns that may lead to defects. All of the elements of our strategy for preventing defects look backwards in time, after the defect has been created.

We are trying to drive the car by looking in the rear-view mirror.

Being Proactive

The digital age gives us a unique opportunity to shift our focus from being strictly reactive to proactively managing events and preventing issues from happening in the first place.

The opportunity for the future of quality in the digital age is in the development of the predictive power of a fully characterized process and its quality results. The best representative for this mindset is the control plan. The control plan represents what is known in Six Sigma as the transfer function (Y=f(x)). The transfer function is the formula of process variables that need to be controlled to achieve a good result.
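As a hedged illustration of the transfer function idea, the Python sketch below fits the simplest possible Y = f(x), a straight line with one process variable, and then inverts it to find the window of x that keeps the predicted Y within specification. The data, the specification limits and the function names are all hypothetical, and a real process would usually need several variables and a richer model.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return intercept, slope

def x_window(intercept, slope, y_low, y_high):
    """Range of x for which the fitted line predicts y in [y_low, y_high].

    Assumes slope != 0, i.e. x actually influences y.
    """
    a = (y_low - intercept) / slope
    b = (y_high - intercept) / slope
    return min(a, b), max(a, b)

# Hypothetical history: product temperature (deg C) vs. a quality score.
temps = [60.0, 62.0, 64.0, 66.0, 68.0]
scores = [90.0, 88.0, 86.0, 84.0, 82.0]

intercept, slope = fit_line(temps, scores)
# Hypothetical specification: the quality score must stay between 85 and 89.
low_x, high_x = x_window(intercept, slope, y_low=85.0, y_high=89.0)
print(f"score = {intercept:.1f} + ({slope:.1f}) * temp")
print(f"hold temperature between {low_x:.1f} and {high_x:.1f} deg C")
```

The design point is that the control plan entry becomes a window on x derived from the desired Y, rather than a limit chosen because x happened to be easy to measure.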

To be effective, the control plan should consist only of the most essential items to achieve a good product, not the things that are easiest or most convenient to measure. Review the control plan with the following question in mind: “Are these elements actually serving as a proxy for something that is unmeasurable in the old paradigm?”

If control-plan elements are proxies for what really should be controlled, now is the opportunity to correct that using digital technology. For example, if the control plan lists an inspection point for cooling tank temperature, that measurement is really a proxy for product temperature—one step removed from the actual variable that matters. It was the best we could do at the time, so we went with it. In our example, product temperature is a key control for a couple of different quality characteristics of the final product. However, if we could employ a laser thermometer to constantly measure the product temperature at many points in the manufacturing process, and report that data back to a machine learning system, now we have meaningful data directly from the variable that matters to a quality outcome.
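To make the shift from detection to prediction concrete, here is a small Python sketch that takes a stream of product temperature readings and estimates how many more readings remain before an upper limit is crossed. A plain linear trend over the most recent readings stands in for the machine learning system described above; the function name, the window size and the limit are hypothetical, and readings are assumed to be evenly spaced in time.

```python
import math

def steps_until_limit(readings, upper_limit, window=5):
    """Estimate how many more readings until upper_limit is reached.

    Fits a straight line to the last `window` readings and extrapolates
    from the latest one. Returns 0 if the latest reading is already at or
    over the limit, and None if the trend is flat or falling (or if there
    are too few readings to fit a trend).
    """
    recent = readings[-window:]
    n = len(recent)
    if recent[-1] >= upper_limit:
        return 0
    if n < 2:
        return None
    # Least-squares slope against the time index 0..n-1.
    t_bar = (n - 1) / 2
    y_bar = sum(recent) / n
    stt = sum((t - t_bar) ** 2 for t in range(n))
    sty = sum((t - t_bar) * (y - y_bar) for t, y in enumerate(recent))
    slope = sty / stt
    if slope <= 0:
        return None
    return math.ceil((upper_limit - recent[-1]) / slope)

# Hypothetical readings drifting upward toward a hypothetical 65 deg C limit.
warning = steps_until_limit([62.0, 62.5, 63.0, 63.5, 64.0], upper_limit=65.0)
```

Every reading in this example is still within the limit, yet the trend gives the operator lead time to act before any out-of-limit product is made.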

The digital age gives us the unique opportunity to redefine what is possible, to connect the dots from product and process quality to the real control variables for achieving a good outcome. Over time, as the “library” of process models is populated and used, those models will improve and become more reliable, and trust in the machine learning system to give proper indications will grow. Then, what was previously inconceivable becomes possible: a quality prediction that leads ultimately to true prevention. Predictive quality will be at least 10 times less expensive than current prevention methods, just as decades-old and widely accepted predictive maintenance practices have proven to be.

James Wells is principal consultant at Quality in Practice, a consulting and training practice specializing in continuous improvement programs. He specializes in quality fundamentals, including the application of digital solutions to common manufacturing challenges. He has led quality and continuous improvement organizations for over 20 years at various manufacturing companies. Wells is a certified master Black Belt and certified lean specialist.