To Find New Innovations, Stop Researching the Same Old Stuff

By Noah Smith

In a recent Forbes article, astronomer and writer Ethan Siegel called for a big new particle collider. His reasoning was unusual. Typically, particle colliders are created to test theories — physicists' math shows that undiscovered particles ought to exist, and experimentalists use colliders to see whether they really do. This was the case with the Large Hadron Collider, which was built in Europe with the express purpose of detecting the elusive Higgs boson. It succeeded at that task, earning a Nobel Prize for the theorists who first predicted the particle.

But particle physics is running out of theories to test. The Higgs discovery puts the capstone on the so-called standard model of particle physics. Assuming the Hadron Collider doesn't pop out any completely unexpected new particles — which it has so far shown no sign of doing — it leaves theoretical physicists with nowhere to go. Siegel argues that an even bigger (and much more expensive) collider should be built on the chance that it discovers some as-yet-undreamt-of new phenomena. But fortunately governments seem unlikely to shell out the tens of billions of dollars required, based on nothing more than blind hope that interesting things will appear.

Particle physicists have referred to this seeming dead-end as a nightmare scenario. But it illustrates a deep problem with modern science. Too often, scientists expect to do bigger, more expensive versions of the research that worked before. Instead, what society often needs is for researchers to strike out in entirely new directions.

During the past few decades, a disturbing trend has emerged in many scientific fields. The number of researchers required to generate new discoveries has steadily risen:

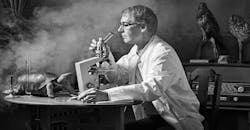

This doesn't mean the research is no longer worth doing. But it does suggest that specific scientific and technical fields yield diminishing marginal returns. In the 1800s, a Catholic monk named Gregor Mendel was able to discover some of the most fundamental concepts of genetic inheritance by growing pea plants. In the 1950s, a handful of scientists at university labs discerned the basic structure of DNA. A few decades later, a large team of scientists sequenced the entire human genome for a little less than $3 billion. Now biotech venture capital spends more than that in a single year — one can receive hundreds of millions of dollars to discover narrow applications of the grand ideas that began in Mendel's modest garden.

If scientific fields are like veins of ore — where the richest and most accessible portions tend to get mined out first — how does technology keep advancing? Partly by throwing more money at the problem, but also by discovering new veins. The universe of scientific fields isn't fixed. Today, artificial intelligence is an enormously promising and rapidly progressing area, but back in 1956, when a key early conference on the topic was held, it was barely a glimmer in researchers' eyes.

To keep rapid progress going, it makes sense to look for new veins of scientific discovery. Of course, there's a limit to how fast that process can be forced — it wasn't until computers became sufficiently powerful, and data sets sufficiently big, that AI really took off. But the way that scientists now are trained and hired seems to discourage them from striking off in bold new directions.

Scientists train to work in specific fields — a physics major studies to become a particle physicist, and gains experience by working under senior, more established particle physicists. They are allowed some leeway to strike out on their own, but their skills and specialized knowledge — what economists call human capital — are oriented toward extending and continuing the work of their advisers. In other words, they get pointed toward working in areas of diminishing returns. This means that as projects like the Hadron Collider require ever-more particle physicists, ever-more researchers are trained to become particle physicists, perpetuating the cycle.

Not only does this process direct researchers away from novelty, but it ignores society's most pressing needs. Discovering the Higgs boson, or spinning theories about the origin of the universe, helps to satisfy human curiosity and our sense of wonder, but it doesn't do much to generate useful technologies in the short term. With climate change a looming crisis, the need to discover sustainable energy technology — especially better power storage — rivals the danger faced by the U.S. in World War II, when many of the country's best physicists were called on to drop their research and join the Manhattan Project. Plumbing the secrets of the cosmos is a good thing, but at present there are much more important areas that demand the talents of the world's most brilliant scientists.

Science thus needs less iteration and more reallocation. Researchers should be prompted to get exposure to a wider array of mentors. They also should be given more leeway to focus on their own ideas. Some sciences might even take inspiration from the field of economics, where — for better or worse — methodological novelty tends to be rewarded above all else.

Granting agencies also can do their part. Giving more money to cheap, highly novel projects — and to less established labs and researchers — can help reorient science toward finding new areas of knowledge. A model might be the Defense Advanced Research Projects Agency (DARPA), renowned for its pursuit of cheap, fast breakthroughs with ad-hoc teams of researchers pulled from a variety of universities and labs.

So what science needs isn't an even bigger particle collider; it needs something that scientists haven't thought of yet.